February 2018 (Relativity 9.5)

February 2017 - Present

PM: Andrea Beckman, Jim Witte

TPM: Elise Tropiano, Trish Gleason

User Experience Designer

User Research

Low Fidelity Wireframes

High Fidelity Mockups

Interactive Prototypes

Usability Testing

Style Guidelines & Final Assets

The Active Learning product has been in various stages of definition and development for the better part of two years. I have had the good fortune of being the lead designer on the project for that entire time.

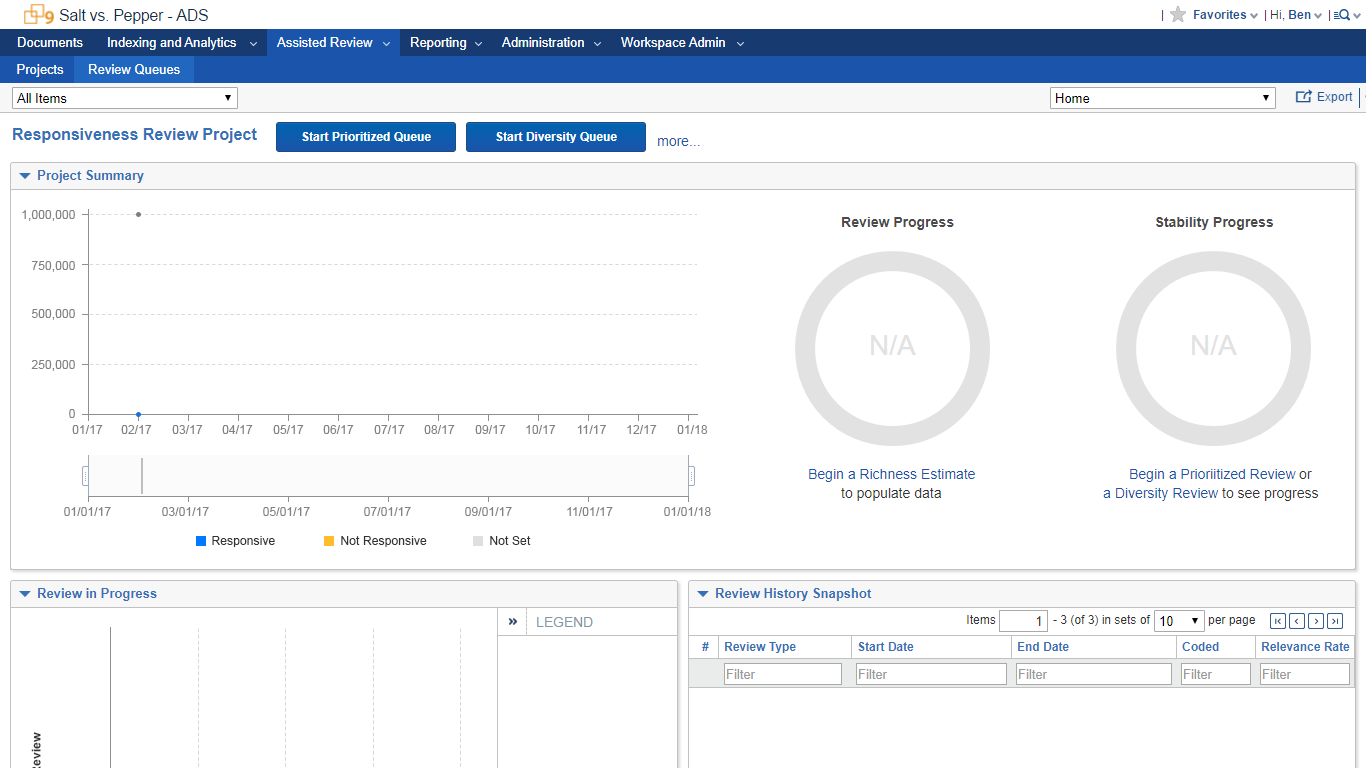

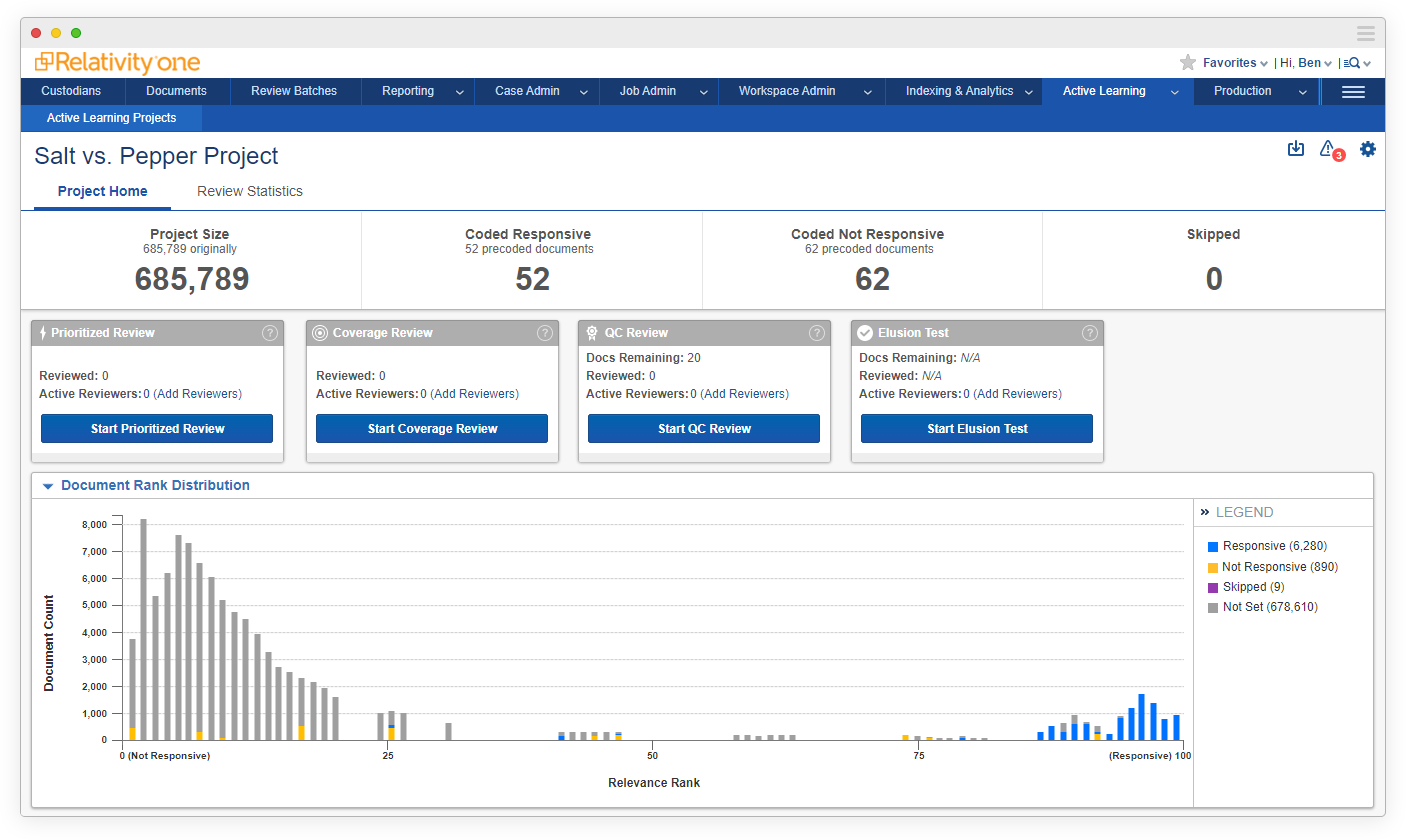

Active Learning is a technology assisted review (TAR) process where the system serves up a continuously updated queue of documents to review, ensuring that the highest ranked (most responsive) documents are presented first.
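To make the "continuously updated queue" idea concrete, here is a minimal Python sketch. It is not Relativity's implementation or API. It assumes that each document carries a relevance score from some ranking model, and that the queue is rebuilt whenever the model re-ranks the unreviewed documents. The names `ReviewQueue`, `update_rankings`, and `next_document` are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedDoc:
    # Scores are negated so Python's min-heap pops the highest-ranked document first.
    neg_score: float
    doc_id: str = field(compare=False)


class ReviewQueue:
    """Hypothetical prioritized review queue (illustrative sketch only)."""

    def __init__(self, rankings):
        # rankings: mapping of document id -> relevance score (assumed model output)
        self._scores = dict(rankings)
        self._reviewed = set()
        self._rebuild()

    def _rebuild(self):
        # Only documents that have not been served yet go back into the queue.
        self._heap = [
            QueuedDoc(-score, doc_id)
            for doc_id, score in self._scores.items()
            if doc_id not in self._reviewed
        ]
        heapq.heapify(self._heap)

    def update_rankings(self, new_scores):
        # Assumed hook: called after the model retrains on new reviewer decisions.
        self._scores.update(new_scores)
        self._rebuild()

    def next_document(self):
        # Serve the highest-ranked unreviewed document, or None when the queue is empty.
        if not self._heap:
            return None
        item = heapq.heappop(self._heap)
        self._reviewed.add(item.doc_id)
        return item.doc_id


if __name__ == "__main__":
    queue = ReviewQueue({"A": 0.2, "B": 0.9, "C": 0.5})
    print(queue.next_document())       # B (highest score)
    queue.update_rankings({"A": 0.95})  # model re-ranks A after new feedback
    print(queue.next_document())       # A
    print(queue.next_document())       # C
```

Rebuilding the heap on each re-rank keeps the sketch simple. A real system with millions of documents would likely update rankings incrementally instead.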

From the outset, we aimed to solve three problems:

Over the course of the past two years, we have built and released a product that is already having a profoundly positive impact on our users and our industry.

Director of Client Services, H&A eDiscovery

One of the primary pieces of feedback we heard while interviewing users (and we heard it a LOT) was that our previous-generation TAR product was too difficult to set up and maintain. “It’s too clicky!” they would say, and they were right.

With that in mind, we set out to streamline the setup process and reduce the overall number of clicks required wherever we could. We achieved this in a variety of ways:

We automated as much as we could to reduce the friction of the setup process. One name, four selections and you’re on your way. As a point of comparison, the previous TAR product had 19 fields to fill out.

Similar to project setup, we eliminated any superfluous inputs or actions from the process of starting a review queue while keeping the product feature-rich.

Canadian Law Firm

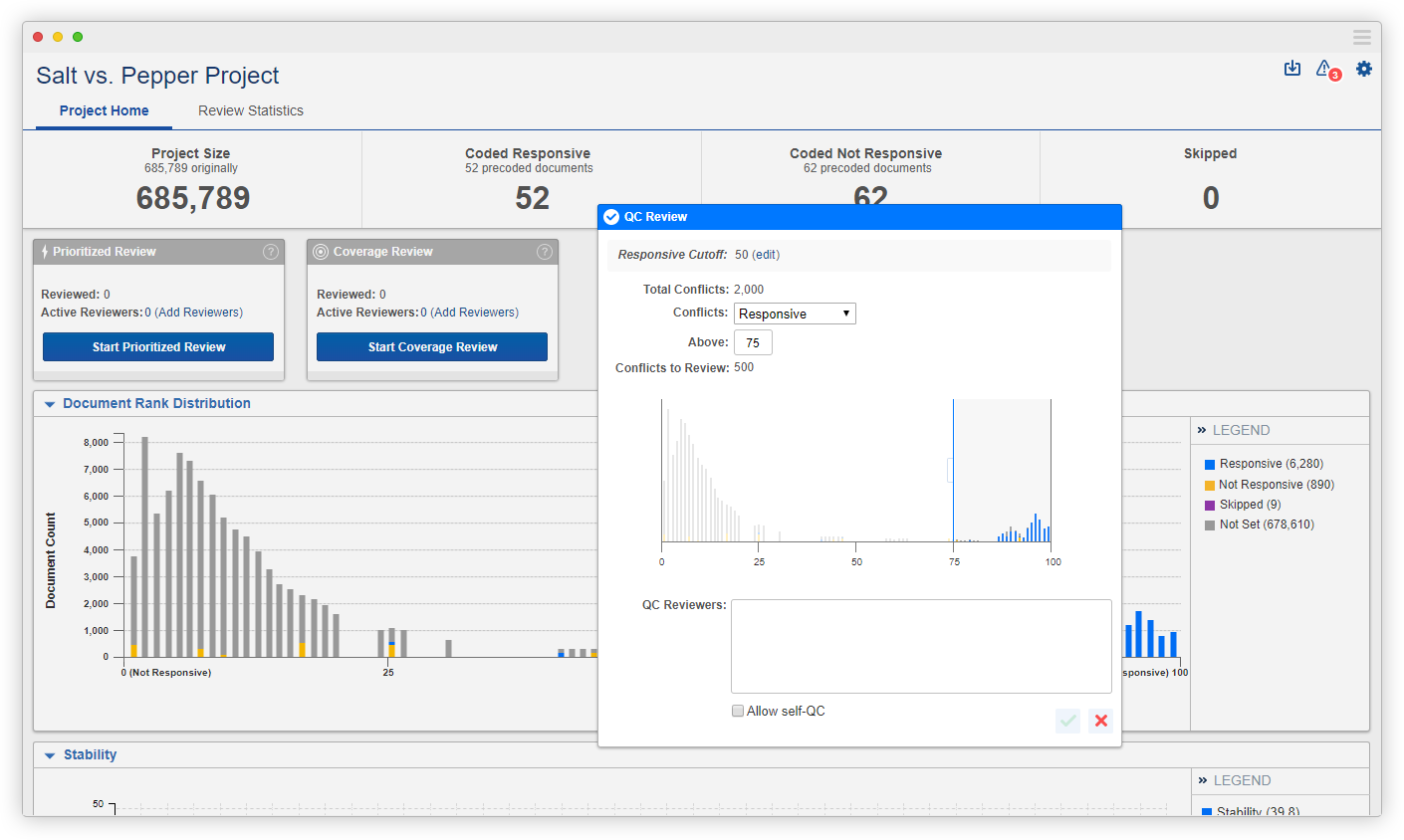

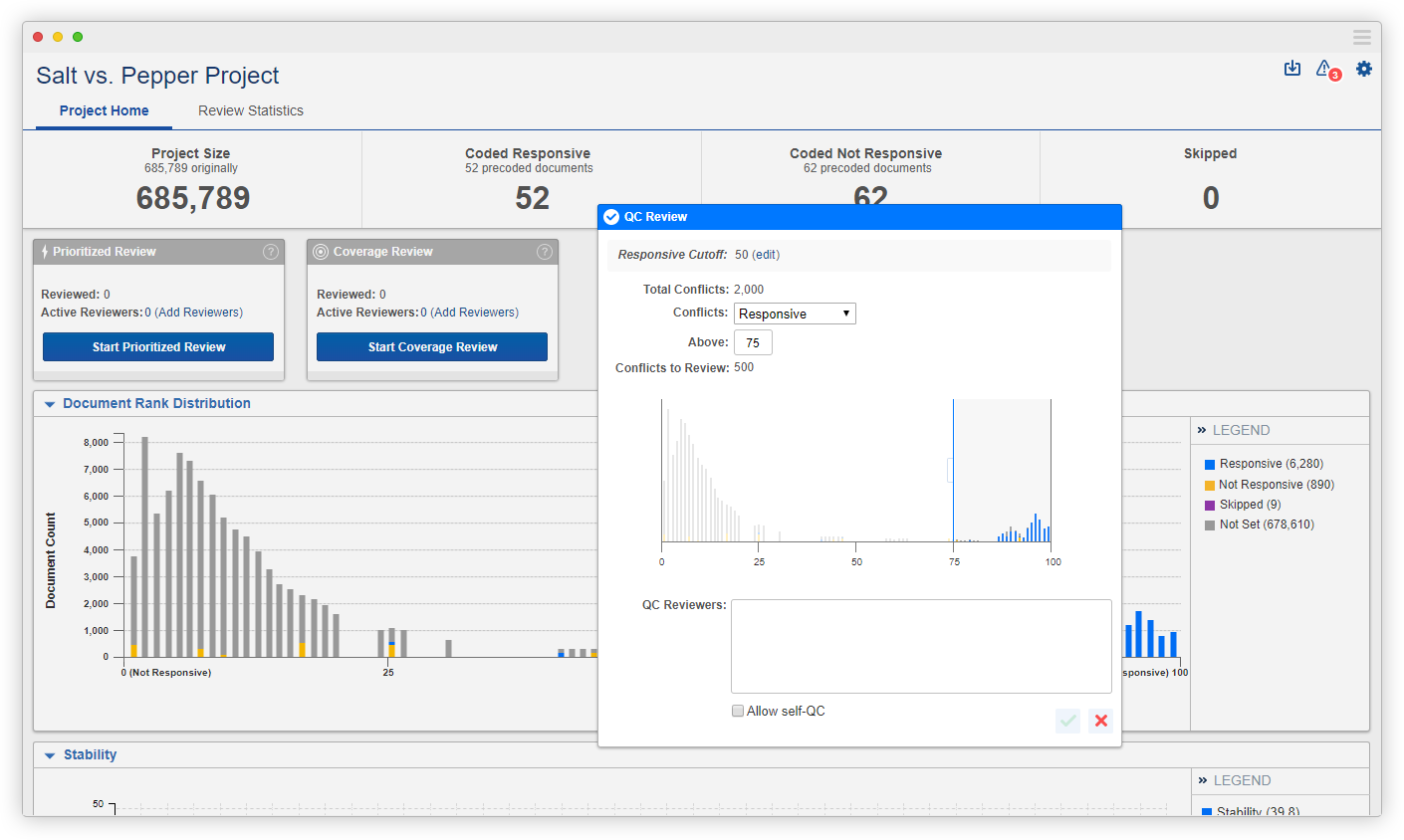

Whether you need a quick update on project progress, or are doing a deep dive into review statistics, both tasks are easily accomplished. Project Home provides high level stats and visualizations to quickly inform a user of the state of the project. Review Statistics captures and logs all useful historical data pertaining to each review and reviewer.

An Active Learning project can end for any number of reasons and in any number of ways, but the most defensible way to end one is by running an Elusion Test. Upon completion, a user can either accept the results, effectively putting the project on hiatus, or resume the project to improve the results. Either way, nothing is permanent: all results are recorded in Review Statistics, and a project can be restarted at any time.

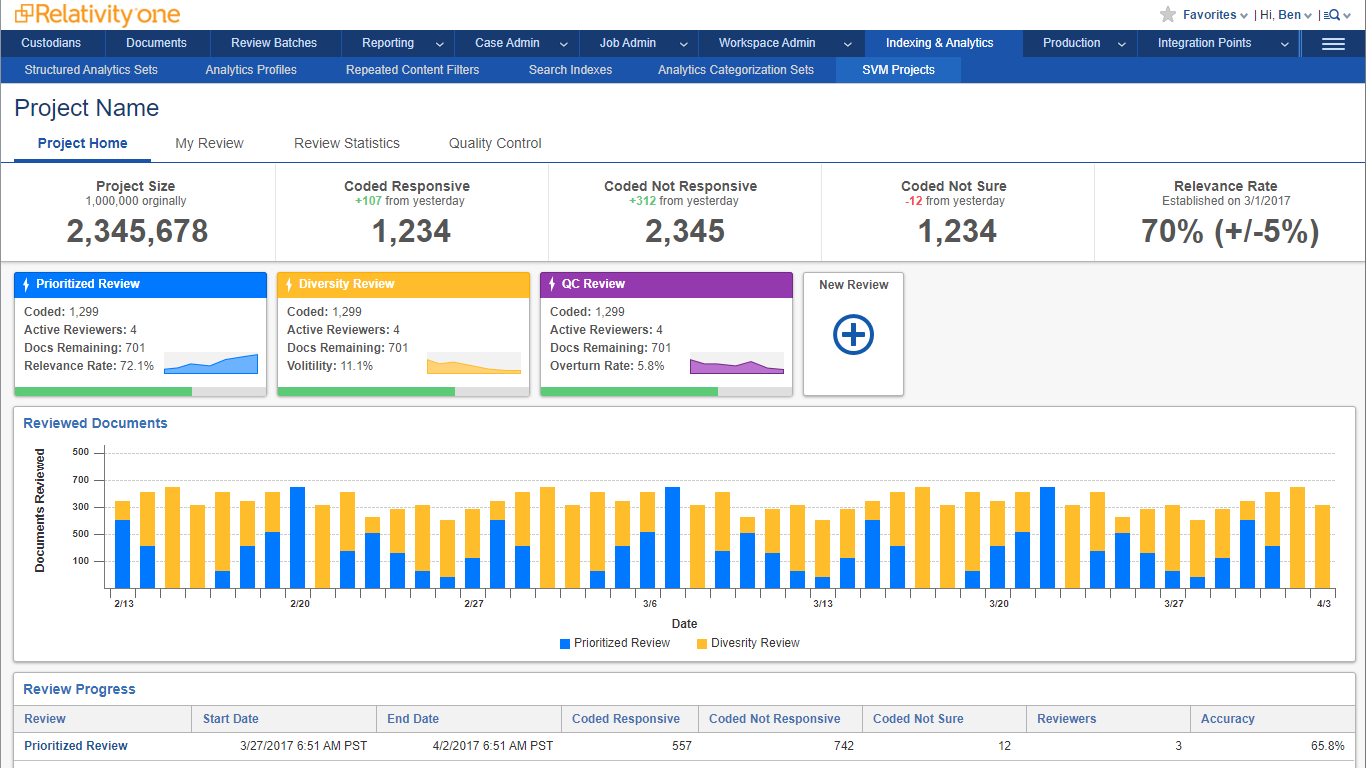

Like all good projects, Active Learning has grown, changed, and matured as we've tested, learned more, and received feedback. Thanks to an eager user base, we were fortunate to have a client advisory board provide feedback early and often. As you can see below, that feedback has drastically changed the form Active Learning has taken.

The main focus of the initial concepts was to provide guidance throughout the project (thus a wizard), differentiation between queue types, and immediate access to starting a review.

Expanding on the wireframes, the mid-fi mockups started the discussion of how we represent our data over the life of a project, and how the project itself evolves over time.

After numerous explorations, countless user interviews, and tons of feedback, the final direction of the product began to take shape. I switched to almost exclusively high fidelity prototyping at this stage.

A tremendous amount of work has gone into this project from every discipline involved. Our hard work continues to pay off as more user success stories roll in each week. It's been a thrill to work on this project from start to finish and see it go from scribbles on a whiteboard to a tangible thing people use to do their jobs.

The official MVP release of Active Learning was in February 2018. Since then we've continued to design and roll out features that have been in the works for a while, notably two additional queue types: Coverage Review and QC Review. Elusion Tests are just around the bend, and further down the road is concurrent queue review. Between those and continuing to gather feedback and iterate, we have our hands pretty full.